Ingest HiBob data into Port via Airbyte, S3 & webhook

This guide demonstrates how to ingest HiBob data into Port using Airbyte, S3, and a webhook integration.

S3 integrations lack some of the features (such as reconciliation) found in Ocean or other Port integration solutions.

As a result, if a record ingested during the initial sync is later deleted in the data source, there’s no automatic mechanism to remove it from Port. The record simply won’t appear in future syncs, but it will remain in Port indefinitely.
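The records left behind are the identifiers that Port already holds but that the latest sync no longer delivers. The Python sketch below shows that set difference. It assumes you already have both lists of identifiers; fetching them is outside the scope of this guide. The identifiers are hypothetical.

```python
def find_stale_identifiers(port_ids: set, latest_sync_ids: set) -> set:
    """Identifiers present in Port but missing from the latest sync.

    With S3 ingestion there is no reconciliation, so these entities stay in
    Port until something removes them explicitly.
    """
    return port_ids - latest_sync_ids


# Hypothetical identifiers: emp-2 was deleted in HiBob after the initial sync.
in_port = {"emp-1", "emp-2", "emp-3"}
latest_sync = {"emp-1", "emp-3"}
print(sorted(find_stale_identifiers(in_port, latest_sync)))  # ['emp-2']
```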

If the data includes a flag for deleted records (e.g., is_deleted: "true"), you can configure a webhook delete operation in your webhook’s mapping configuration to remove these records from Port automatically.
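The sketch below shows the per-record decision behind such a delete operation. It is a Python illustration, not Port's mapping engine. It assumes the flag is named `is_deleted`, as in the example above, and arrives either as the string `"true"` or as a boolean. In the webhook mapping itself, the equivalent check would sit in the delete entry's `filter`, for example a jq expression such as `.body.is_deleted == "true"`.

```python
def choose_operation(record: dict) -> str:
    """Return "delete" for records flagged as deleted, otherwise "create".

    Accepts the flag as the string "true" (any case) or as a boolean True.
    """
    flag = record.get("is_deleted", False)
    return "delete" if str(flag).lower() == "true" else "create"


# Hypothetical records as they might arrive from a sync.
records = [
    {"id": "emp-1", "is_deleted": "false"},
    {"id": "emp-2", "is_deleted": "true"},
    {"id": "emp-3"},  # no flag at all: treated as a live record
]
for rec in records:
    print(rec["id"], choose_operation(rec))
```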

Prerequisites

- Ensure you have a Port account and have completed the onboarding process.

- This feature is part of Port's limited-access offering. To obtain the required S3 bucket, contact our team directly via chat, Slack, or e-mail, and we will create and manage the bucket on your behalf.

- Access to an Airbyte instance (cloud or self-hosted). For reference, follow Airbyte's quick start guide.

Quick start guide for macOS (short version):

- Download and install Docker Desktop.

- Install abctl:

curl -LsfS https://get.airbyte.com | bash -

- Install Airbyte locally:

abctl local install

By default, the application is available at http://localhost:8000/

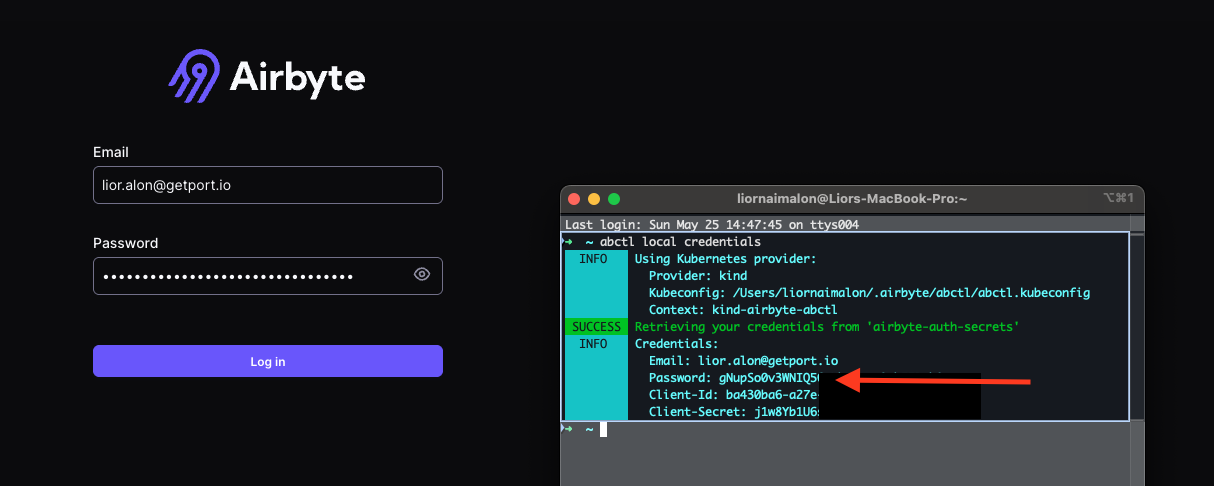

To retrieve your password, run the following command in a terminal (see screenshot):

abctl local credentials

- Set up a HiBob API service user (see HiBob's guide).
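After `abctl local install` finishes, the Airbyte UI can take a while to start answering requests. A small polling helper can confirm that it is up before you continue. The sketch below uses only the Python standard library and assumes the default address http://localhost:8000/ from the quick start above. `wait_for_http` is a hypothetical helper name.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout_s: float = 120.0, interval_s: float = 2.0) -> bool:
    """Return True once `url` answers with any HTTP status, False on timeout."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=5):
                return True
        except urllib.error.HTTPError:
            # The server replied with an error status (e.g. 401), so it is up.
            return True
        except OSError:
            # Connection refused or timed out (URLError is an OSError): retry.
            time.sleep(interval_s)
    return False
```

For example, `wait_for_http("http://localhost:8000/")` returns True once the UI responds. Any HTTP status counts as "up", including an authentication error, because only reachability matters here.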

Data model setup

Create blueprints

Create the Hibob Payroll blueprint:

- Go to the Builder page of your portal.

- Click on "+ Blueprint".

- Click on the {...} button in the top right corner, and choose "Edit JSON".

- Paste the following JSON schema into the editor:

Hibob Payroll blueprint:

{
  "identifier": "hibob_payroll",
  "description": "Represents an employee record.",
  "title": "Hibob Payroll",
  "icon": "Service",
  "schema": {
    "properties": {
      "creationdate": {
        "type": "string",
        "format": "date-time"
      },
      "firstname": {
        "type": "string"
      },
      "avatarurl": {
        "type": "string",
        "format": "url"
      },
      "companyid": {
        "type": "string"
      },
      "surname": {
        "type": "string"
      },
      "state": {
        "type": "string"
      },
      "email": {
        "type": "string",
        "format": "email"
      },
      "creationdatetime": {
        "type": "string",
        "format": "date-time"
      },
      "coverimageurl": {
        "type": "string",
        "format": "url"
      },
      "fullname": {
        "type": "string"
      }
    },
    "required": []
  },
  "mirrorProperties": {},
  "calculationProperties": {},
  "aggregationProperties": {},
  "relations": {}
}
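If you prefer scripting to the Builder UI, you can post the same JSON to Port's REST API. The Python sketch below makes several assumptions that you should check against Port's API reference before relying on it:

- the EU API base URL `https://api.getport.io/v1`;
- the `/auth/access_token` and `/blueprints` endpoints;
- a client ID and secret from your Port account.

`build_request` and `create_blueprint` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Optional

PORT_API = "https://api.getport.io/v1"  # assumption: EU region base URL


def build_request(path: str, payload: dict, token: Optional[str] = None) -> urllib.request.Request:
    """Build a JSON POST request to the Port API, optionally with a bearer token."""
    headers = {"Content-Type": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        f"{PORT_API}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def create_blueprint(client_id: str, client_secret: str, blueprint: dict) -> dict:
    """Exchange credentials for a token, then POST the blueprint JSON."""
    auth_req = build_request("/auth/access_token", {"clientId": client_id, "clientSecret": client_secret})
    with urllib.request.urlopen(auth_req) as resp:
        token = json.load(resp)["accessToken"]
    with urllib.request.urlopen(build_request("/blueprints", blueprint, token)) as resp:
        return json.load(resp)
```

`build_request` is kept separate from the network calls so you can inspect the request shape without sending anything. To create the blueprint, call `create_blueprint(client_id, client_secret, blueprint)` with the parsed JSON above.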

Create the Hibob Profile blueprint in the same way:

Hibob Profile blueprint:

{
  "identifier": "hibob_profile",
  "description": "Represents an employee record.",
  "title": "Hibob Profile",
  "icon": "User",
  "schema": {
    "properties": {
      "companyid": {
        "type": "string"
      },
      "firstname": {
        "type": "string"
      },
      "surname": {
        "type": "string"
      },
      "email": {
        "type": "string",
        "format": "email"
      },
      "is_manager": {
        "type": "boolean",
        "title": "is_manager"
      },
      "duration_of_employment": {
        "type": "string",
        "title": "duration_of_employment"
      }
    },
    "required": []
  },
  "mirrorProperties": {},
  "calculationProperties": {},
  "aggregationProperties": {},
  "relations": {
    "payroll": {
      "title": "Payroll",
      "target": "hibob_payroll",
      "required": false,
      "many": false
    }
  }
}
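The `payroll` relation above is single-valued (`"many": false`). The webhook mapping later in this guide fills it with `.body.id`, which is also the value used as the payroll entity's identifier. In other words, each profile points at the payroll entity that shares its HiBob ID. The Python model below illustrates that lookup. The entities are hypothetical, and this is not how Port stores relations internally.

```python
from typing import Optional

# Hypothetical entities keyed by identifier (the HiBob employee ID).
payroll_entities = {
    "emp-1": {"blueprint": "hibob_payroll", "title": "Ada Lovelace"},
}
profile_entities = {
    "emp-1": {"blueprint": "hibob_profile", "relations": {"payroll": "emp-1"}},
    "emp-9": {"blueprint": "hibob_profile", "relations": {"payroll": "emp-9"}},
}


def resolve_payroll(profile_id: str) -> Optional[dict]:
    """Follow a profile's single-valued "payroll" relation to its target entity."""
    target_id = profile_entities[profile_id]["relations"]["payroll"]
    return payroll_entities.get(target_id)  # None when no payroll entity has that ID


print(resolve_payroll("emp-1")["title"])  # Ada Lovelace
print(resolve_payroll("emp-9"))           # None
```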

Create Webhook Integration

Create a webhook integration to ingest the data into Port:

-

Go to the Data sources page of your portal.

-

Click on "+ Data source".

-

In the top selection bar, click on Webhook, then select

Custom Integration. -

Enter a name for your Integration (for example: "Hibob Integration"), enter a description (optional), then click on

Next. -

Copy the Webhook URL that was generated and include set up the airbyte connection (see Below).

-

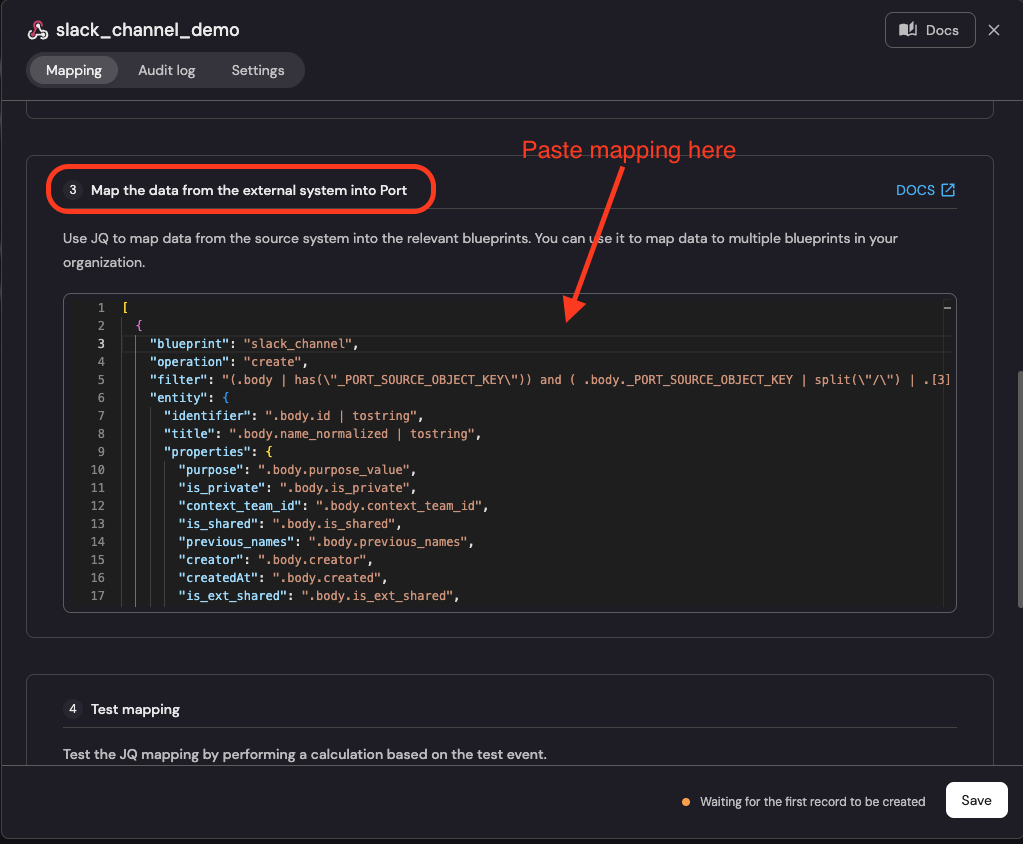

Scroll down to the section titled "Map the data from the external system into Port" and paste the following mapping:

Hibob webhook mapping:

[
  {
    "blueprint": "hibob_payroll",
    "operation": "create",
    "filter": "(.body | has(\"_PORT_SOURCE_OBJECT_KEY\")) and (.body._PORT_SOURCE_OBJECT_KEY | split(\"/\") | .[2] | IN(\"payroll\"))",
    "entity": {
      "identifier": ".body.id",
      "title": ".body.displayName",
      "properties": {
        "creationdate": "(.body.creationDate? // null) | if type == \"string\" then strptime(\"%Y-%m-%d\") | strftime(\"%Y-%m-%dT%H:%M:%SZ\") else null end",
        "firstname": ".body.firstName",
        "avatarurl": ".body.avatarUrl",
        "companyid": ".body.companyId",
        "surname": ".body.surname",
        "state": ".body.state",
        "email": ".body.email",
        "creationdatetime": ".body.creationDatetime",
        "coverimageurl": ".body.coverImageUrl",
        "fullname": ".body.fullName"
      }
    }
  },
  {
    "blueprint": "hibob_profile",
    "operation": "create",
    "filter": "(.body | has(\"_PORT_SOURCE_OBJECT_KEY\")) and (.body._PORT_SOURCE_OBJECT_KEY | split(\"/\") | .[2] | IN(\"profiles\"))",
    "entity": {
      "identifier": ".body.id",
      "title": ".body.displayName",
      "properties": {
        "companyid": ".body.companyId",
        "firstname": ".body.firstName",
        "is_manager": ".body.work.isManager",
        "duration_of_employment": ".body.work.durationOfEmployment.humanize",
        "surname": ".body.surname",
        "email": ".body.email"
      },
      "relations": {
        "payroll": ".body.id"
      }
    }
  }
]
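The Python below mirrors two of the jq expressions above, as an illustration only; Port evaluates the jq itself:

- the `filter`, which picks the blueprint from the third path segment of `_PORT_SOURCE_OBJECT_KEY`;
- the `creationdate` conversion.

It assumes object keys start with the `data/` bucket path, followed by the namespace and the stream name, as configured in the Airbyte setup. The sample key is hypothetical.

```python
from datetime import datetime
from typing import Optional

STREAM_TO_BLUEPRINT = {"payroll": "hibob_payroll", "profiles": "hibob_profile"}


def target_blueprint(body: dict) -> Optional[str]:
    """Mirror of the mapping filters: choose a blueprint from path segment [2]."""
    key = body.get("_PORT_SOURCE_OBJECT_KEY")
    if key is None:
        return None  # has("_PORT_SOURCE_OBJECT_KEY") is false
    parts = key.split("/")
    stream = parts[2] if len(parts) > 2 else None  # jq's .[2] yields null when missing
    return STREAM_TO_BLUEPRINT.get(stream)


def to_iso_datetime(value) -> Optional[str]:
    """Mirror of the creationdate expression: "YYYY-MM-DD" -> "YYYY-MM-DDT00:00:00Z"."""
    if not isinstance(value, str):
        return None
    return datetime.strptime(value, "%Y-%m-%d").strftime("%Y-%m-%dT%H:%M:%SZ")


key = "data/wSLvwtI1LFwQzXXX/payroll/year=2024/month=05/01_1714521600000_"  # hypothetical
print(target_blueprint({"_PORT_SOURCE_OBJECT_KEY": key}))  # hibob_payroll
print(to_iso_datetime("2023-04-17"))                       # 2023-04-17T00:00:00Z
```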

Airbyte Setup

Set up S3 Destination

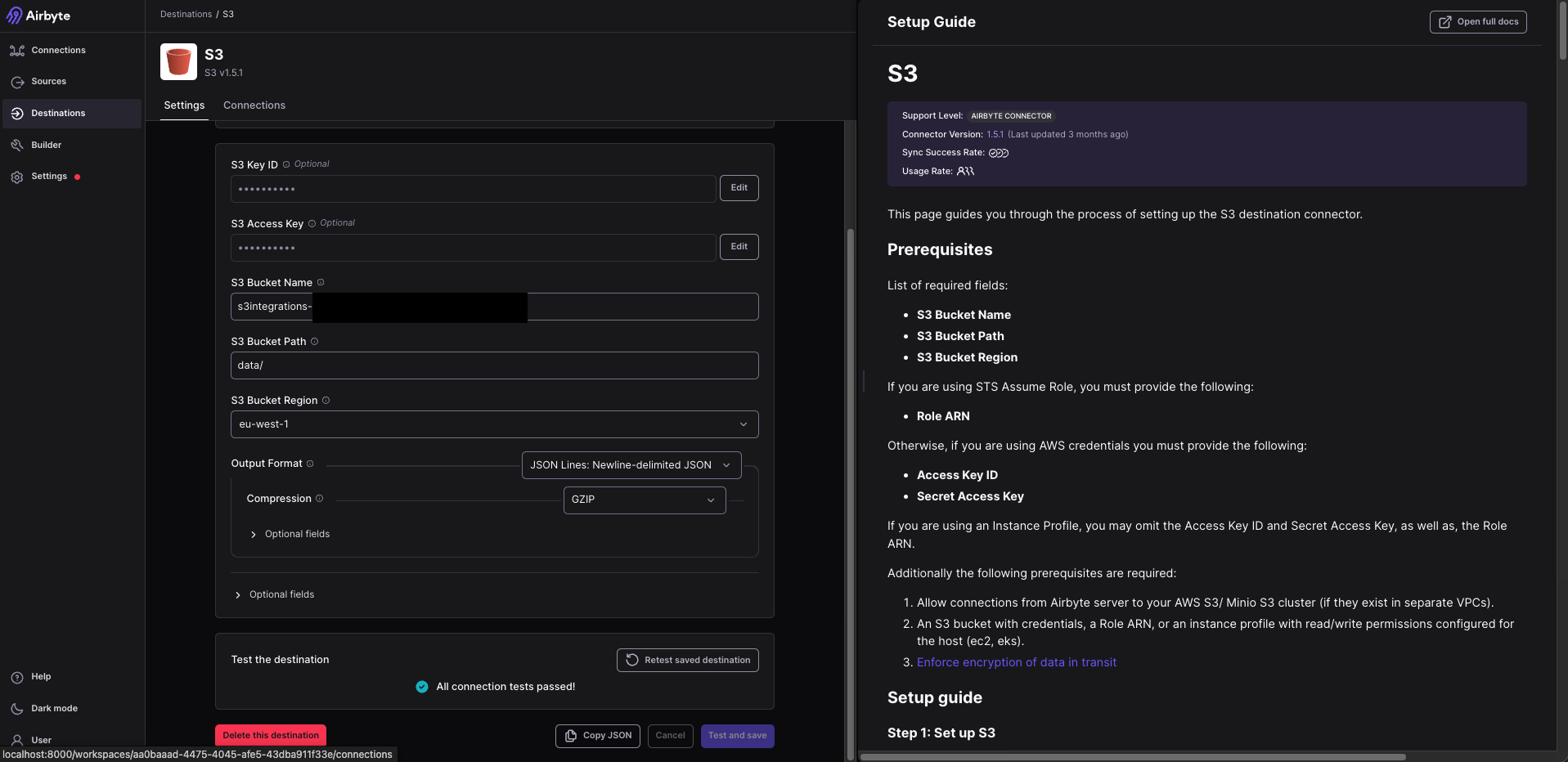

If you haven't already set up an S3 Destination for Port S3, you can do so either through the Airbyte UI or with Terraform.

Using the UI:

- Log in to your Airbyte application (cloud or self-hosted).

- In the left-side pane, click on Destinations.

- Click on + New Destination.

- Input the S3 credentials provided to you by Port:

  - Under S3 Key ID, enter your S3 Access Key ID.

  - Under S3 Access Key, enter your S3 Access Key Secret.

  - Under S3 Bucket Name, enter the bucket name (example: "org-xxx").

  - Under S3 Bucket Path, enter "data/".

  - Under S3 Bucket Region, enter the appropriate region.

  - For output format, choose "JSON Lines: Newline-delimited JSON".

  - For compression, choose "GZIP".

  - Under Optional Fields, enter the following in S3 Path Format:

    ${NAMESPACE}/${STREAM_NAME}/year=${YEAR}/month=${MONTH}/${DAY}_${EPOCH}_

- Click "Test and save" and wait for Airbyte to confirm that the Destination is set up correctly.

Using Terraform:

terraform {
  required_providers {
    airbyte = {
      source  = "airbytehq/airbyte"
      version = "0.6.5"
    }
  }
}

# Credentials and API URL of your Airbyte instance.
provider "airbyte" {
  username   = "<AIRBYTE_USERNAME>"
  password   = "<AIRBYTE_PASSWORD>"
  server_url = "<AIRBYTE_API_URL>"
}

# S3 destination pointing at the Port-managed bucket.
resource "airbyte_destination_s3" "port-s3-destination" {
  configuration = {
    access_key_id     = "<S3_ACCESS_KEY>"
    secret_access_key = "<S3_SECRET_KEY>"
    s3_bucket_region  = "<S3_REGION>"
    s3_bucket_name    = "<S3_BUCKET>"
    s3_bucket_path    = "data/"
    # JSON Lines output, GZIP-compressed.
    format = {
      json_lines_newline_delimited_json = {
        compression = { gzip = {} }
        format_type = "JSONL"
      }
    }
    # HCL strings use double quotes; "$${" escapes interpolation so Airbyte receives a literal "${".
    s3_path_format   = "$${NAMESPACE}/$${STREAM_NAME}/year=$${YEAR}/month=$${MONTH}/$${DAY}_$${EPOCH}_"
    destination_type = "s3"
  }
  name         = "port-s3-destination"
  workspace_id = var.workspace_id
}

variable "workspace_id" {
  default = "<AIRBYTE_WORKSPACE_ID>"
}
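With "JSON Lines" output and GZIP compression, each object the destination writes is a gzip-compressed text file holding one JSON document per line. You may want to download one of these objects to inspect what Airbyte produced. The sketch below is a minimal standard-library reader for such a file. `read_jsonl_gz` is a hypothetical helper name, and the exact fields in each record depend on the Airbyte connector and destination settings.

```python
import gzip
import json
from typing import Iterator


def read_jsonl_gz(path: str) -> Iterator[dict]:
    """Yield one parsed JSON record per non-empty line of a .jsonl.gz file."""
    with gzip.open(path, "rt", encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if line:  # skip blank lines defensively
                yield json.loads(line)
```

Reading lazily with a generator keeps memory flat even for large syncs. Wrap the call in `list(...)` if you want all records at once.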

Set up Hibob Connection

- Follow Airbyte's guide to set up the HiBob source connector. For more information, see Airbyte's documentation on setting up source connectors.

- After the source is set up, create a "+ New Connection".

- For Source, choose the HiBob source you have set up.

- For Destination, choose the S3 Destination you have set up.

- In the Select Streams step, make sure only "payroll" and "profiles" are marked for synchronization.

- In the Configuration step, under Destination Namespace, choose "Custom Format" and enter the Webhook URL you copied when setting up the webhook, for example: "wSLvwtI1LFwQzXXX".

- Click on Finish & Sync to apply the configuration and start the integration process.
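Once the connection runs, the S3 Path Format configured earlier turns the destination namespace and the stream name into path segments. The webhook mapping's `filter` reads the stream name back from index `[2]` after splitting on `/`. The Python illustration below uses `string.Template`, whose `${NAME}` placeholders match Airbyte's syntax. The namespace is the guide's example value, while the date and epoch values are hypothetical. The rest of the object name that Airbyte writes after the trailing underscore is not shown.

```python
from string import Template

# S3 Path Format from the destination settings (UI form, without Terraform's $$ escaping).
PATH_FORMAT = "${NAMESPACE}/${STREAM_NAME}/year=${YEAR}/month=${MONTH}/${DAY}_${EPOCH}_"


def key_prefix(values: dict, bucket_path: str = "data/") -> str:
    """Fill Airbyte's placeholders and prepend the S3 Bucket Path."""
    return bucket_path + Template(PATH_FORMAT).substitute(values)


prefix = key_prefix({
    "NAMESPACE": "wSLvwtI1LFwQzXXX",  # example value from the Configuration step
    "STREAM_NAME": "payroll",
    "YEAR": "2024", "MONTH": "05", "DAY": "01",  # hypothetical sync date
    "EPOCH": "1714521600000",                    # hypothetical timestamp
})
print(prefix)                # data/wSLvwtI1LFwQzXXX/payroll/year=2024/month=05/01_1714521600000_
print(prefix.split("/")[2])  # payroll
```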

If you entered any values that differ from those specified in this guide, let us know so we can help ensure the integration runs smoothly.