Interact with AI agents

This feature is currently in closed beta with limited availability. Access is provided on an application basis.

To request access, please reach out to us by filling out this form.

Getting started

Once you've built your AI agents, it's time to interact with them. Port provides several ways to communicate with your AI agents.

Interaction options

You have two main approaches when interacting with AI agents in Port:

- Specific agent

- Agent router

Choose a specific agent when you have a structured scenario, such as triggering an agent from an automation or using an AI widget. This approach works best when you know exactly which agent has the expertise needed for your task, or when the interaction method requires you to select a specific agent.

The agent router is used when you prefer a more conversational interaction, or when the interaction method doesn't allow for selecting a specific agent directly. The router intelligently determines which agent is best suited to handle your request based on its content and context. This is the default for interactions via Slack, unless a specific agent is targeted. For API and action-based interactions, you can choose to direct your request to the agent router.

Interaction methods

- Widget

- Slack Integration

- Actions and automations

- API integration

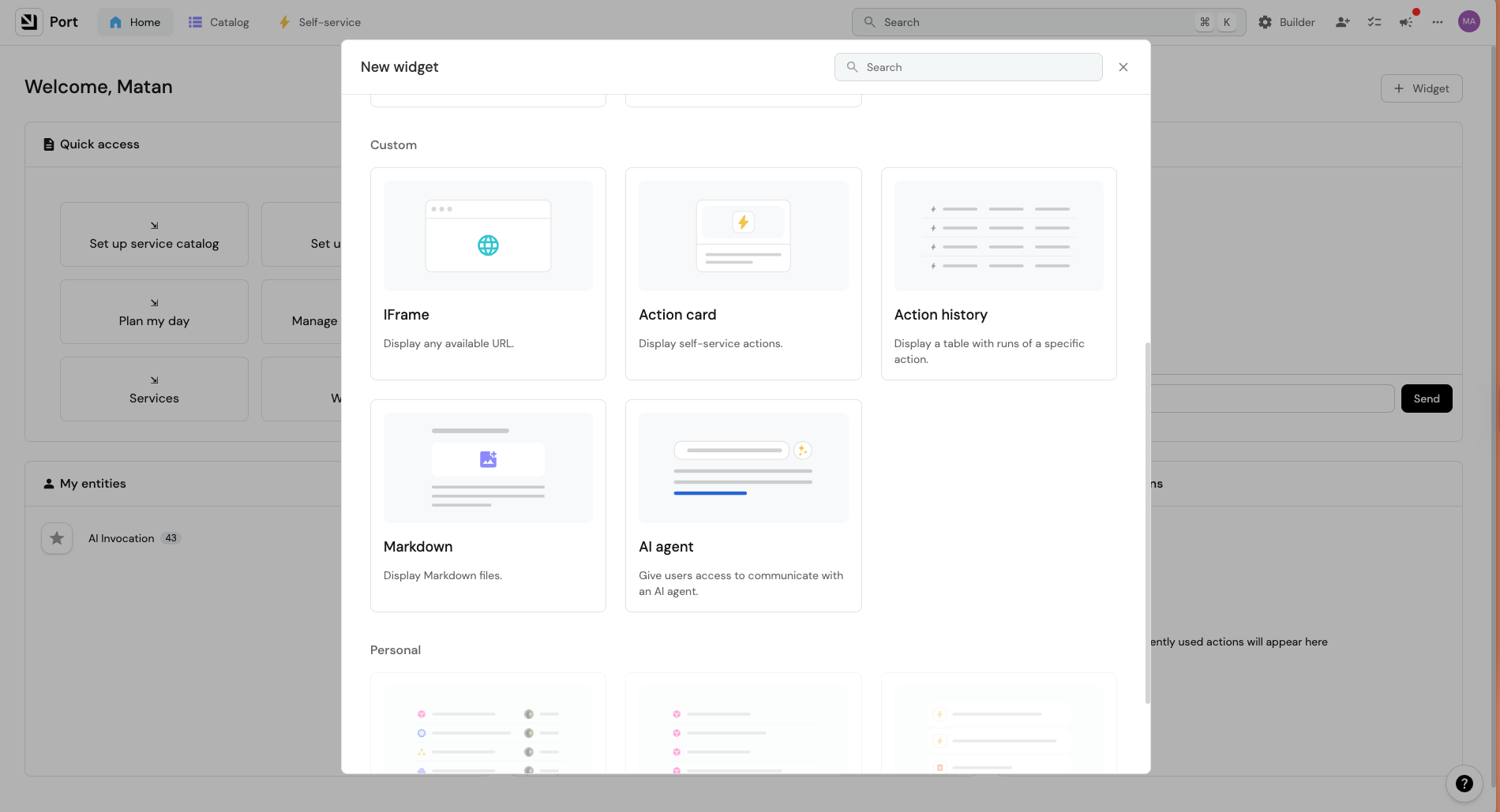

You can add AI agents directly to your dashboards as interactive widgets, providing easy access to their capabilities right where you need them.

Follow these steps to add an AI agent widget:

- Go to a dashboard.

- Click on + Widget.

- Select the AI Agent.

- Choose the agent and position it in the widget grid.

The widget provides a chat interface where you can ask questions and receive responses from the specific agent you configured without leaving your dashboard.

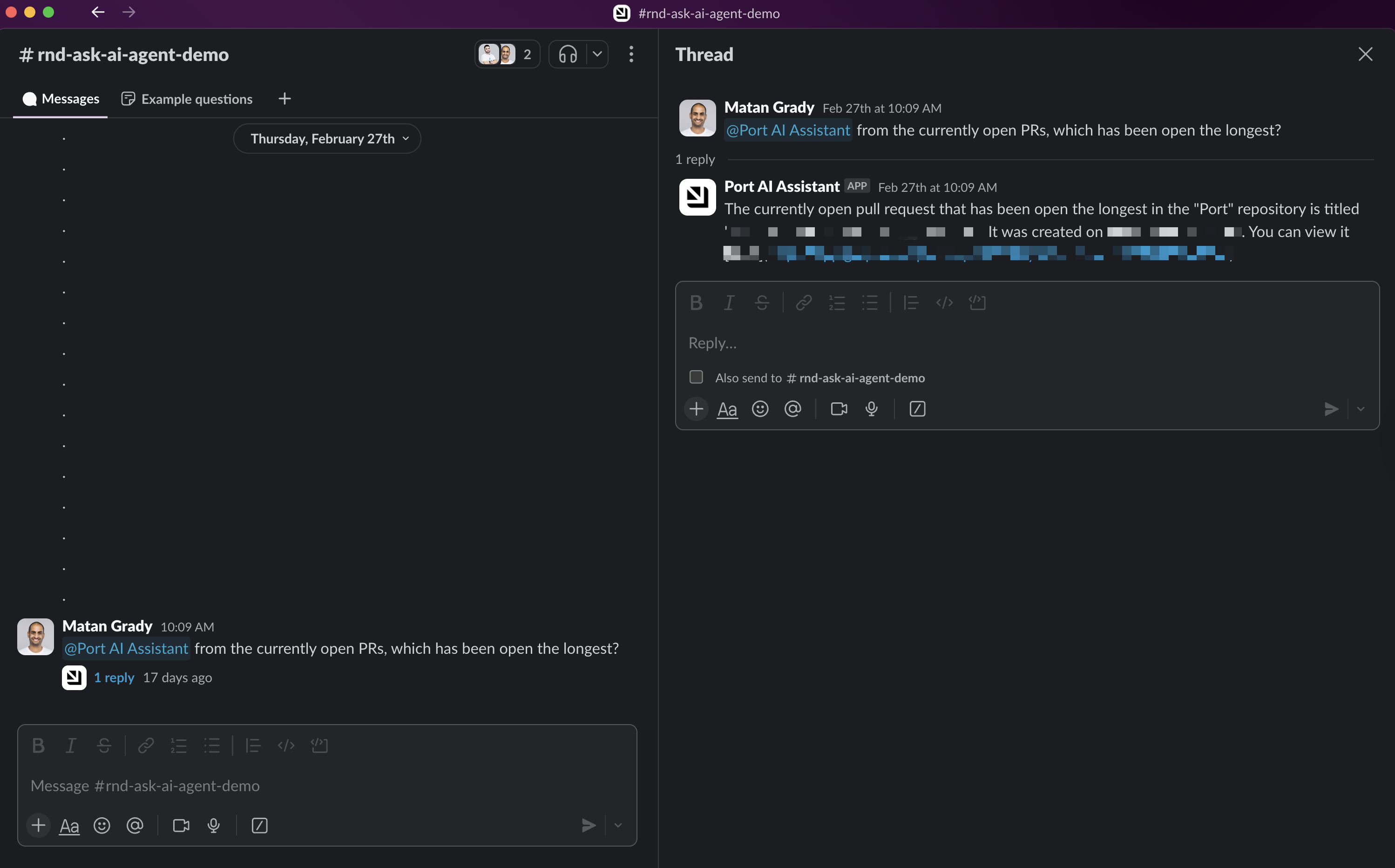

The Slack integration provides the most natural way to interact with Port's AI agents. This method abstracts all technical details, allowing for free-flowing conversations. By default, messages sent to the Port Slack app (either via direct message or by mentioning it in a channel) are handled by the agent router.

You can interact with agents in two ways:

- Direct messaging the Port Slack app. This will use the agent router.

- Mentioning the app in any channel it's invited to. This will also use the agent router.

When you send a message, the app will:

- Open a thread.

- Respond with the agent's answer.

Tips for effective Slack interactions

- To target a specific agent instead of using the router, include the agent's nickname at the beginning of your message (e.g., "@Port DevAgent what are our production services?").

- Send follow-up messages in the same thread and mention the app again to continue the conversation.

- Keep conversations focused on the same topic for best results.

- Limit threads to five consecutive messages for optimal performance.

- For best results, start new threads for new topics or questions.

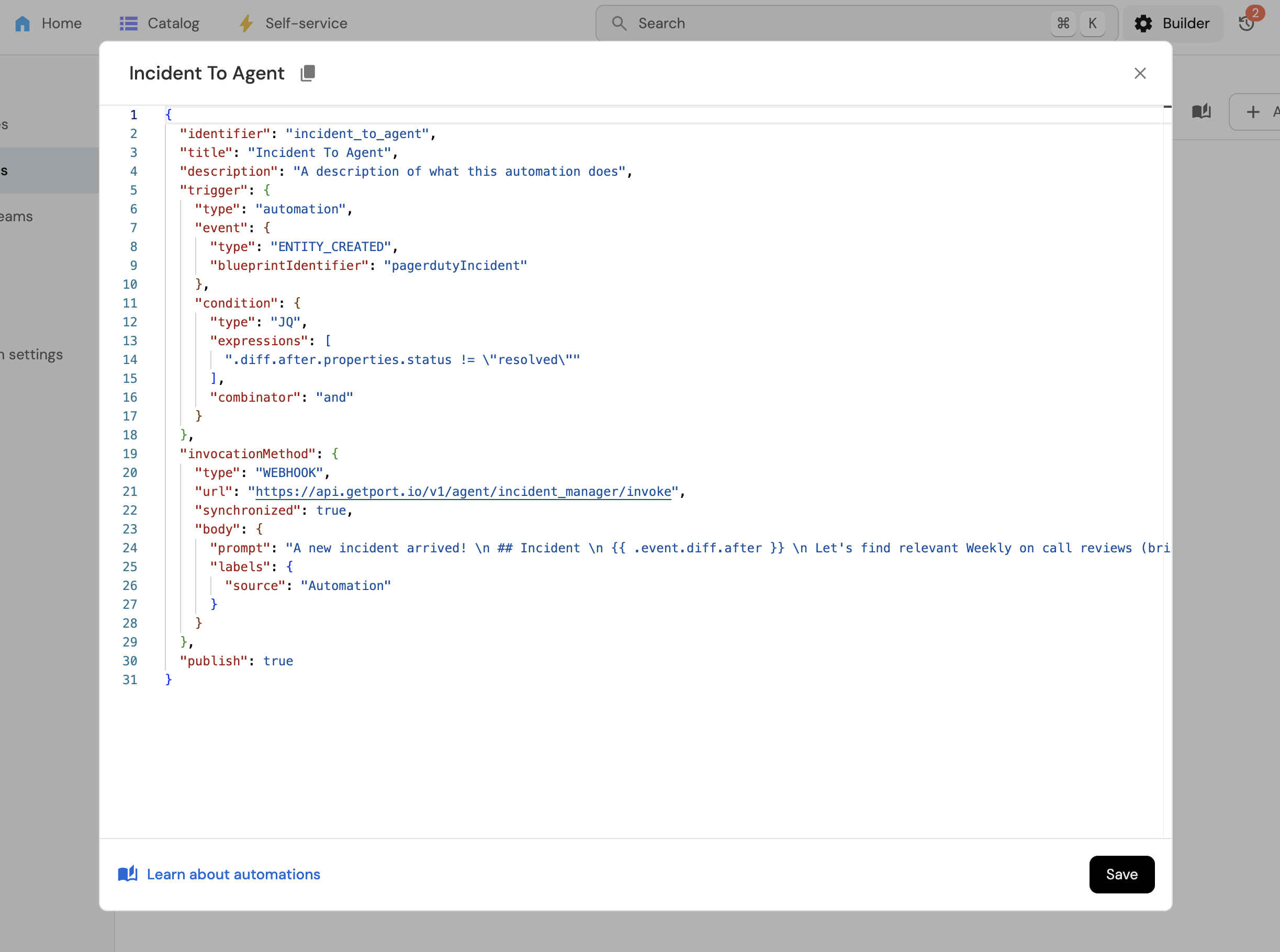

You can trigger AI agents through Port's actions and automations, allowing you to integrate AI capabilities into your existing workflows. When configuring an action or automation, you can choose to invoke a specific agent or send the request to the agent router.

For example, when a new incident is created in Port, you can trigger an agent that:

- Triages the incident.

- Summarizes relevant information.

- Sends a notification to Slack.

Port is an API-first platform, allowing you to integrate AI agents into your custom workflows. You can interact with agents in two main ways: by streaming responses as Server-Sent Events (SSE) for real-time updates, or by polling for a complete response.

Streaming Responses (Recommended)

Streaming allows you to receive parts of the agent's response as they are generated, providing a more interactive experience. This is achieved by adding the stream=true query parameter to the invoke API call (see Invoke a specific agent API or Invoke an agent API). The response will be in text/event-stream format.

Interaction Process (Streaming):

- Invoke the agent with the stream=true parameter.

- The API will start sending Server-Sent Events.

- Your client should process these events as they arrive. Each event provides a piece of information about the agent's progress or the final response.

cURL Example for Streaming:

The following example shows how to invoke a specific agent; the agent router can be invoked in the same way.

curl 'https://api.port.io/v1/agent/<AGENT_IDENTIFIER>/invoke?stream=true' \
  -H 'Authorization: Bearer <YOUR_API_TOKEN>' \
  -H 'Content-Type: application/json' \
  --data-raw '{"prompt":"What is my next task?"}'
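For clients written in Python, the same request can be sketched with the standard library. This is a minimal sketch, not an official client: the helper names `build_stream_request` and `stream_lines` are hypothetical, and the use of POST is inferred from the `--data-raw` flag in the cURL example.

```python
import json
import urllib.request

# Base URL taken from the cURL example above.
API_BASE = "https://api.port.io/v1"


def build_stream_request(agent_identifier: str, api_token: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) the streaming invoke request for a specific agent."""
    url = f"{API_BASE}/agent/{agent_identifier}/invoke?stream=true"
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",  # assumption: curl sends POST when --data-raw is used
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )


def stream_lines(request: urllib.request.Request):
    """Send the request and yield decoded lines of the event stream as they arrive."""
    with urllib.request.urlopen(request) as response:
        for raw_line in response:
            yield raw_line.decode("utf-8").rstrip("\r\n")
```

Building the request separately from sending it keeps the URL and headers easy to inspect before any network call is made.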

Streaming Response Details (Server-Sent Events):

The API will respond with Content-Type: text/event-stream; charset=utf-8.

Each event in the stream has the following format:

event: <event_name>
data: <json_payload_or_string>

Note the blank line after data: ... which separates events.

Here's an example sequence of events:

event: plan
data: { "plan": "...", "toolCalls": [...] }

event: execution
data: Your final answer from the agent.

event: done
data: {}
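The event format above can be parsed with a few lines of Python. The following is a minimal sketch that handles only the `event:` and `data:` fields described here; the function name `parse_sse` is hypothetical, and other fields defined by the Server-Sent Events specification (such as `id:` or `retry:`) are ignored.

```python
from typing import Iterable, Iterator, List, Optional, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event_name, data) pairs from the lines of a text/event-stream body."""
    event_name: Optional[str] = None
    data_lines: List[str] = []
    for line in lines:
        line = line.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if event_name is not None or data_lines:
                yield (event_name or "message", "\n".join(data_lines))
            event_name, data_lines = None, []
        elif line.startswith("event:"):
            event_name = line[len("event:"):].strip()
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # Drop the single optional space that follows the colon.
            if value.startswith(" "):
                value = value[1:]
            data_lines.append(value)
    # Flush a trailing event if the stream ended without a final blank line.
    if event_name is not None or data_lines:
        yield (event_name or "message", "\n".join(data_lines))
```

The parser returns the data as a raw string, because some events carry JSON and others (such as execution) carry plain text.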

Possible Event Types:

- agentSelection: Provides the result from the agent router.

{
  "type": "SELECTED_AGENT",
  "identifier": "agent_id",
  "thought_process": "Why this agent was selected..."
}

type can also be "NO_AGENT_MATCH" if no suitable agent is found.

- checkIfActionRequested: Indicates which self-service action, if any, the agent has decided to run.

{
  "actionIdentifier": "action_id"
}

{"actionIdentifier": null} if no action is requested.

- plan: Details the agent's reasoning and intended steps (tools to be called, etc.).

{
  "plan": "Detailed plan...",
  "toolCalls": [
    {
      "name": "tool_name",
      "arguments": {}
    }
  ]
}

- execution: The final textual answer or a chunk of the answer from the agent for the user. For longer responses, multiple execution events might be sent.

- done: Signals that the agent has finished processing and the response stream is complete.

{}
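To illustrate how a client might react to each event type, here is a minimal Python sketch that folds a sequence of already-parsed (event name, data) pairs into one result object. The `AgentRun` class and `collect_run` function are hypothetical names, and joining execution chunks by plain concatenation is an assumption about how chunks are meant to be combined.

```python
import json
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple


@dataclass
class AgentRun:
    selected_agent: Optional[str] = None      # from agentSelection
    action_identifier: Optional[str] = None   # from checkIfActionRequested
    plan: Optional[str] = None                # from plan
    tool_names: List[str] = field(default_factory=list)
    answer: str = ""                          # accumulated execution chunks
    done: bool = False


def collect_run(events: Iterable[Tuple[str, str]]) -> AgentRun:
    """Fold (event_name, data) pairs into a single AgentRun."""
    run = AgentRun()
    for name, data in events:
        if name == "agentSelection":
            payload = json.loads(data)
            # type may be "NO_AGENT_MATCH", in which case no agent is recorded.
            if payload.get("type") == "SELECTED_AGENT":
                run.selected_agent = payload.get("identifier")
        elif name == "checkIfActionRequested":
            run.action_identifier = json.loads(data).get("actionIdentifier")
        elif name == "plan":
            payload = json.loads(data)
            run.plan = payload.get("plan")
            run.tool_names = [call["name"] for call in payload.get("toolCalls", [])]
        elif name == "execution":
            # Assumption: multiple execution chunks concatenate directly.
            run.answer += data
        elif name == "done":
            run.done = True
            break
    return run
```

Unknown event names are skipped, so the sketch keeps working if further event types are added later.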

Polling for Responses

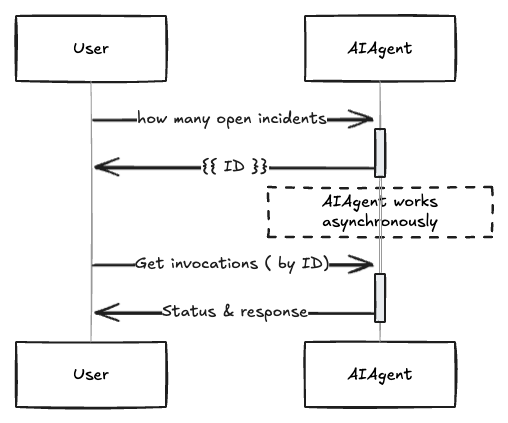

If you prefer to get the entire response at once after processing is complete, or if your client doesn't support streaming, you can use the polling method.

- Invoke the agent (see Invoke a specific agent API) or agent router (see Invoke an agent API) with your request (without stream=true).

- Receive an invocationId in the response.

- Periodically poll the AI invocation endpoint (see Get an invocation's result API) using the invocationId until the status field indicates completion (e.g., Completed or Failed).

- The final response will be available in the polled data once completed.
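The polling steps above can be sketched as a small Python loop. This is an illustration under stated assumptions: `fetch_invocation` is a hypothetical callable that you supply to call the Get an invocation's result API and return the decoded JSON as a dict, the `status` field is assumed to sit at the top level of that dict, and the interval and timeout defaults are arbitrary.

```python
import time
from typing import Any, Callable, Dict

# Example terminal values mentioned in the text; other values are treated as "still running".
TERMINAL_STATUSES = {"Completed", "Failed"}


def poll_invocation(
    fetch_invocation: Callable[[str], Dict[str, Any]],
    invocation_id: str,
    interval_seconds: float = 2.0,
    timeout_seconds: float = 120.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, Any]:
    """Poll until the invocation reaches a terminal status or the timeout passes."""
    deadline = clock() + timeout_seconds
    while True:
        invocation = fetch_invocation(invocation_id)
        if invocation.get("status") in TERMINAL_STATUSES:
            return invocation
        if clock() >= deadline:
            raise TimeoutError(f"invocation {invocation_id} did not finish in time")
        sleep(interval_seconds)
```

Passing `sleep` and `clock` as parameters makes the loop testable without real waiting; in normal use the defaults are enough.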

Discovering Available Agents

AI agents are standard Port entities belonging to the _ai_agent blueprint. This means you can query, manage, and interact with them using the same API endpoints and methods you use for any other entity in your software catalog.

You can discover available AI agents in your Port environment in a couple of ways:

- AI Agents Catalog Page: Navigate to the AI Agents catalog page in Port. This page lists all the agents that have been created in your organization. For more details on creating agents, refer to the Build an AI agent guide.

- Via API: Programmatically retrieve a list of all AI agents using the Port API. AI agents are entities of the _ai_agent blueprint. You can use the Get all entities of a blueprint API endpoint to fetch them, specifying _ai_agent as the blueprint identifier.

cURL Example

curl -L 'https://api.port.io/v1/blueprints/_ai_agent/entities' \
  -H 'Accept: application/json' \
  -H 'Authorization: Bearer <YOUR_API_TOKEN>'
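A Python version of the same call might look like the sketch below. The function names are hypothetical, and the response shape is an assumption: the code expects a top-level "entities" list whose items carry "identifier" and "title" keys, so check the Get all entities of a blueprint API reference before relying on it.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional, Tuple

# URL taken from the cURL example above.
ENTITIES_URL = "https://api.port.io/v1/blueprints/_ai_agent/entities"


def fetch_agents_payload(api_token: str) -> Dict[str, Any]:
    """Call the entities endpoint and return the decoded JSON body."""
    request = urllib.request.Request(
        ENTITIES_URL,
        headers={
            "Accept": "application/json",
            "Authorization": f"Bearer {api_token}",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def agent_summaries(payload: Dict[str, Any]) -> List[Tuple[Optional[str], Optional[str]]]:
    """Return (identifier, title) pairs; assumes an "entities" list in the payload."""
    return [
        (entity.get("identifier"), entity.get("title"))
        for entity in payload.get("entities", [])
    ]
```

The returned identifiers are the values you would substitute for <AGENT_IDENTIFIER> when invoking a specific agent.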

AI interaction details

Every AI agent interaction creates an entity in Port, allowing you to track and analyze the interaction. This information helps you understand how the agent processed your request and identify opportunities for improvement.

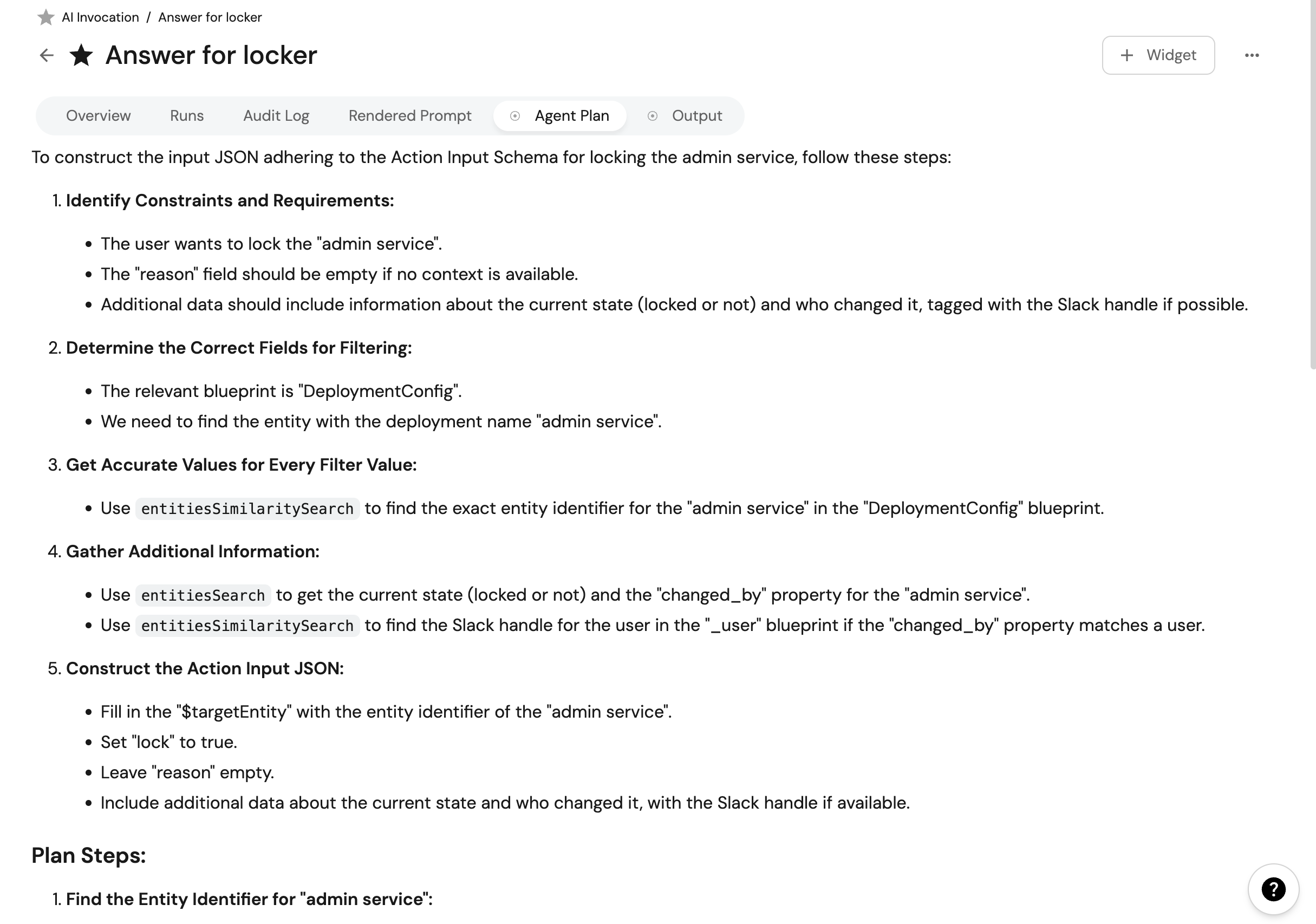

Plan

The plan shows how the agent decided to tackle your request and the steps it intended to take. This provides insight into the agent's reasoning process.

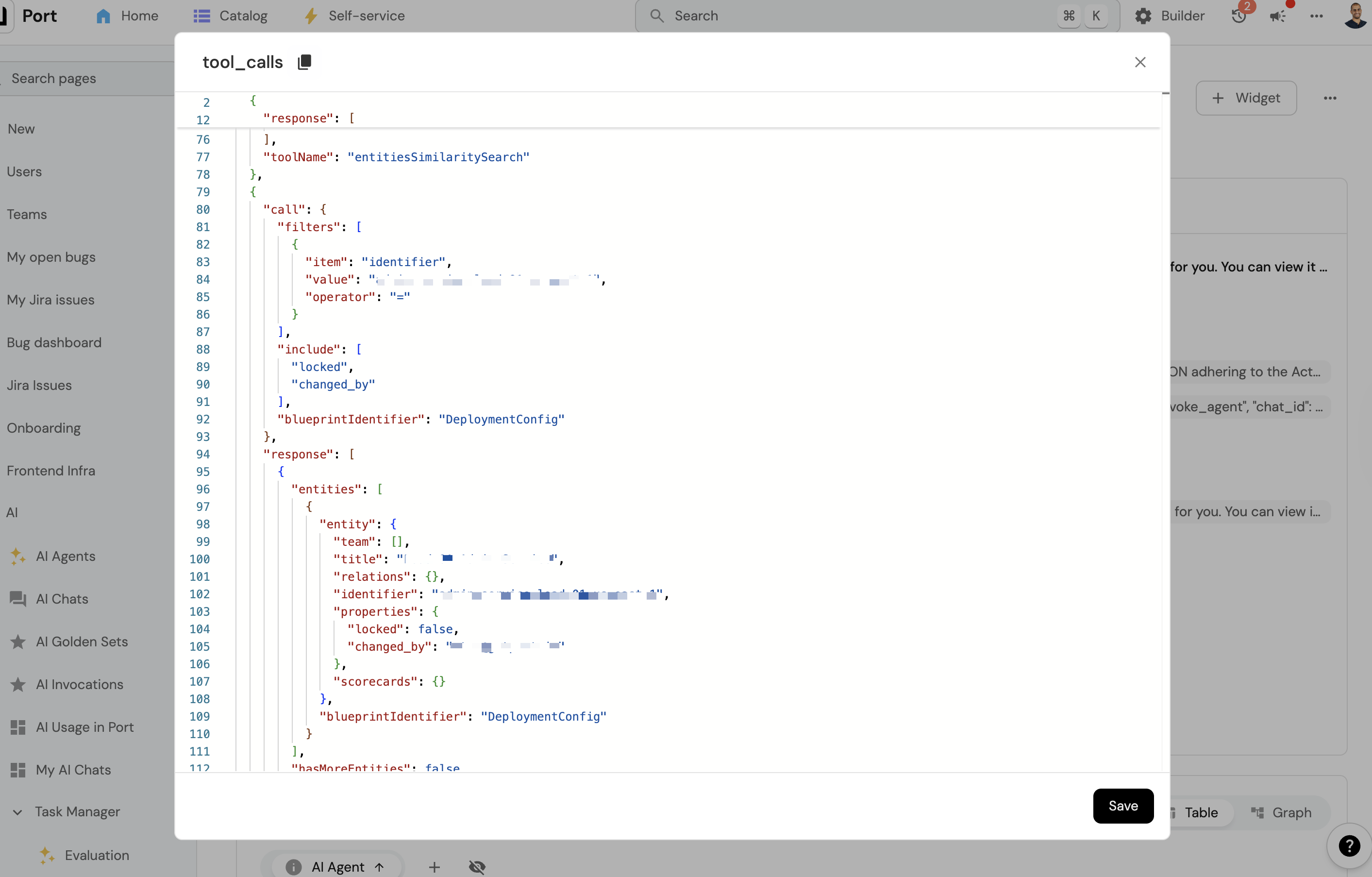

Tools used

This section displays the actual steps the agent took and the APIs it used to complete your request. It can be particularly helpful for debugging when answers don't meet expectations, such as when an agent:

- Used an incorrect field name.

- Chose an inappropriate property.

- Made other logical errors.

Tokens

Each interaction records both input and output tokens, helping you understand your LLM usage. This information can be valuable for:

- Identifying which agents consume more of your token allocation.

- Optimizing prompts for efficiency.

- Managing costs effectively.

Data handling

We store data from your interactions with AI agents for up to 30 days. We use this data to ensure agents function correctly and to identify and prevent problematic or inappropriate AI behavior. We limit this data storage strictly to these purposes. You can contact us to opt out of this data storage.

Limits

Port applies limits to AI agent interactions to ensure fair usage across all customers:

- Query limit: ~40 queries per hour.

- Token usage limit: 800,000 tokens per hour.

Usage limits may change without prior notice. Once a limit is reached, you will need to wait until it resets.

If you attempt to interact with an agent after reaching a limit, you will receive an error message indicating that the limit has been exceeded.

The query limit is estimated and depends on the actual token usage.
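If you call agents from your own code, a client-side counter can help you stay under these limits. The sketch below is illustrative only: `HourlyUsageTracker` is a hypothetical helper, the defaults mirror the figures listed above (which may change), and the sliding one-hour window is an assumption, since the way Port resets its limits is not described here.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class HourlyUsageTracker:
    """Track queries and tokens sent during the last hour (client-side estimate)."""

    def __init__(
        self,
        max_queries: int = 40,
        max_tokens: int = 800_000,
        window_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_queries = max_queries
        self.max_tokens = max_tokens
        self.window_seconds = window_seconds
        self._clock = clock
        self._events: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens)

    def _prune(self, now: float) -> None:
        # Forget interactions that are older than the window.
        while self._events and now - self._events[0][0] >= self.window_seconds:
            self._events.popleft()

    def record(self, tokens: int) -> None:
        """Record one completed interaction and the tokens it used."""
        now = self._clock()
        self._prune(now)
        self._events.append((now, tokens))

    def can_send(self, estimated_tokens: int = 0) -> bool:
        """Return True if one more query would stay within both limits."""
        self._prune(self._clock())
        used_tokens = sum(tokens for _, tokens in self._events)
        return (
            len(self._events) < self.max_queries
            and used_tokens + estimated_tokens <= self.max_tokens
        )
```

Because the server-side limits are authoritative, treat this tracker as an early warning and still handle the limit-exceeded error.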

Common errors

Here are some common errors you might encounter when working with AI agents and how to resolve them:

Missing Blueprints Error

Error message:

{"missingBlueprints":["{{blueprint name}}","{{blueprint name}}"]}

What it means:

This error occurs when an AI agent tries to execute a self-service action that requires selecting entities from specific blueprints, but the agent doesn't have access to those blueprints.

How to fix:

Add the missing blueprints listed in the error message to the agent's configuration.
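When handling this error in code, the blueprint names can be read directly from the error body. The sketch below is a minimal Python example; the function name `missing_blueprints` is hypothetical, and it assumes the error body is a JSON object shaped like the message shown above.

```python
import json
from typing import List


def missing_blueprints(error_body: str) -> List[str]:
    """Return the blueprint names listed in a missingBlueprints error, if any."""
    try:
        payload = json.loads(error_body)
    except json.JSONDecodeError:
        # Not JSON at all, so this is some other kind of error.
        return []
    if not isinstance(payload, dict):
        return []
    names = payload.get("missingBlueprints", [])
    if not isinstance(names, list):
        return []
    return [name for name in names if isinstance(name, str)]
```

An empty list means the body was not a missing-blueprints error, so the caller can fall through to its general error handling.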

Security considerations

AI agent interactions in Port are designed with security and privacy as a priority.

For more information on security and data handling, see our AI agents overview.

Troubleshooting & FAQ

The agent is taking a long time to respond

Depending on the agent definition and task, as well as load on the system, the agent may take some time to respond. Response times between 20 and 40 seconds are acceptable and expected.

If responses consistently take longer than this, consider:

- Checking the details of the invocation.

- Reaching out to our Support if you feel something is not right.

How can I interact with the agent?

Currently, you can interact with Port AI agents through:

- The AI agent widget in the dashboards.

- Slack integration.

- Actions and automations.

- API integration.

We're working on adding direct interaction through the Port UI in the future.

What can I ask the agent?

Each agent has optional conversation starters to help you understand what it can help with. The questions you can ask depend on which agents were built in your organization.

For information on building agents with specific capabilities, see our Build an AI agent guide.

What happens if there is no agent that can answer my question?

If no agent in your organization has the knowledge or capabilities to answer your question, you'll receive a response mentioning that the agent can't assist you with your query.

The agent is getting it wrong and has incorrect answers

AI agents can make mistakes. If you're receiving incorrect answers:

- Analyze the tools and plan the agent used (visible in the invocation details).

- Consider improving the agent's prompt to better guide its responses.

- Try rephrasing your question or breaking it into smaller, more specific queries.

- Reach out to our support for additional assistance if problems persist.

Remember that AI agents are constantly learning and improving, but they're not infallible.

My agent isn't responding in Slack

Ensure that:

- The Port Slack app is properly installed in your workspace.

- The app has been invited to the channel where you're mentioning it.

- You're correctly mentioning the app (@Port).

- You've completed the authentication flow with the app.

- You haven't exceeded your usage limits (see Limits).

How can I provide feedback on agent responses?

The AI invocation entity contains a feedback property, which you can mark as Negative or Positive. We're working on adding a more convenient way to rate conversations from Slack and from the UI.

How is my data with AI agents handled?

We store data from your interactions with AI agents for up to 30 days. We use this data to ensure agents function correctly and to identify and prevent problematic or inappropriate AI behavior. We limit this data storage strictly to these purposes. You can contact us to opt out of this data storage.